Since September I have been working at the Sabancı Üniversitesi Cognitive Robotics Laboratory (CogRobo Lab) on a new project supported by the TÜBİTAK 2216 international post-doctoral fund. I was attracted to the CogRobo Lab because the work there focuses on the interface between “high-level” and “low-level” reasoning, and I am particularly interested in the relationship between physical and abstract cognition. The CogRobo Lab is also ambitious in terms of the potential applications of the technology we are developing there.

The CogRobo Lab was founded on the premise that logic programming is a promising software engineering tool for specifying robot problems and generating abstract solutions to them. This promise rests in turn on the extensibility of logic databases (roughly, the ability to add new facts to a knowledge base) and on the existence of increasingly powerful general-purpose reasoners. Action planning is one area where logic programming is customarily applied in robotics.

Unfortunately, making such an approach work in real systems takes a lot of effort to bring these abstract formalisms into the real world. They are difficult to apply in practice because of the vast number of “low-level” behaviours or computations needed to support them. These low-level computations are frequently brought in as a range of special-purpose hacks, and even when they are not hacks they tend to remain special-purpose, both because they are time-consuming to develop and because many difficult problems in this area are still unsolved.

The approach at the CogRobo Lab is therefore to try to realise the promise of logic programming by exploring the necessary interfaces between general-purpose reasoning mechanisms and more special-purpose ones. The idea is to create general-purpose components and interfaces that can support logic programming as a robot programming paradigm. In planning, this area of research is known as hybrid logic programming, and one approach to it that the CogRobo Lab is currently exploring in particular is the external predicate, or semantic attachment: a predicate whose truth value is not looked up in the knowledge base but computed on demand by an outside procedure, such as a geometric or collision check.
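To make the idea of an external predicate concrete, here is a minimal sketch in Python, not the lab's implementation: a toy breadth-first planner over pick and place actions in which the symbolic preconditions are checked against the state, while the hypothetical predicate `fits_on` is evaluated by calling an ordinary function that stands in for a geometric check. The object heights, shelf clearances and all names are invented for illustration.

```python
from collections import deque
from typing import FrozenSet, Iterator, List, Optional, Tuple

Fact = Tuple[str, ...]
State = FrozenSet[Fact]
Action = Tuple[str, ...]

# Hypothetical geometry (metres); on a robot these values would come from perception.
OBJECT_HEIGHT = {"box": 0.30, "can": 0.12}
SHELF_CLEARANCE = {"high_shelf": 0.40, "low_shelf": 0.20}


def fits_on(obj: str, shelf: str) -> bool:
    """External predicate (semantic attachment): computed by a procedure
    when the planner needs it, never stored as facts in the state."""
    return OBJECT_HEIGHT[obj] < SHELF_CLEARANCE[shelf]


def successors(state: State) -> Iterator[Tuple[Action, State]]:
    """Ground pick/place actions applicable in `state`."""
    if ("handfree",) in state:
        for fact in sorted(state):
            if fact[0] == "on_table":
                obj = fact[1]
                yield ("pick", obj), (state - {("handfree",), fact}) | {("holding", obj)}
    for fact in sorted(state):
        if fact[0] == "holding":
            obj = fact[1]
            for shelf in sorted(SHELF_CLEARANCE):
                # Symbolic precondition from the state, geometric one from the attachment.
                if ("empty", shelf) in state and fits_on(obj, shelf):
                    yield ("place", obj, shelf), (
                        (state - {fact, ("empty", shelf)}) | {("on", obj, shelf), ("handfree",)}
                    )


def plan(init: State, goal: FrozenSet[Fact]) -> Optional[List[Action]]:
    """Breadth-first search for a shortest action sequence reaching `goal`."""
    frontier = deque([(init, [])])
    seen = {init}
    while frontier:
        state, steps = frontier.popleft()
        if goal <= state:
            return steps
        for action, nxt in successors(state):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, steps + [action]))
    return None


init = frozenset({("handfree",), ("on_table", "box"), ("on_table", "can"),
                  ("empty", "high_shelf"), ("empty", "low_shelf")})
print(plan(init, frozenset({("on", "box", "high_shelf"), ("on", "can", "low_shelf")})))
print(plan(init, frozenset({("on", "box", "low_shelf")})))  # box too tall: None
```

The first query yields a four-step plan that puts the box on the high shelf, because the attachment rules out the low one; the second is unsolvable for the same reason. In an answer set programming setting a similar role could be played by, for example, clingo's external functions (`@`-terms) evaluated during grounding; the sketch only illustrates the principle that the reasoner asks a special-purpose procedure instead of storing every geometric fact.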

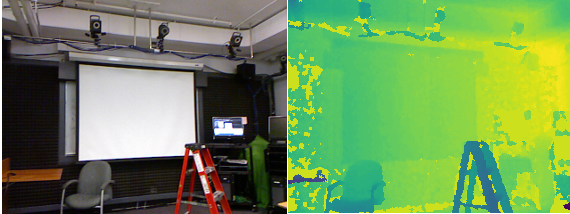

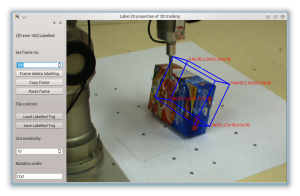

My own research at the lab investigates how sensory processing components can be constructed so that they can be re-used by logic programs; so that they are reflexive to the information needed by planning (no unnecessary sensory computation is done, leaving more room for relevant but expensive computation); and so that they can adapt to, and possibly make use of, low-bandwidth information supplied through the “high-level” interfaces (for example, verbal knowledge). Furthermore, they must be integrated with geometric, kinematic and dynamic reasoning (for example, motion planning and object stability analysis).

To this end I am investigating different paradigms of data association between sensory and high-level components, different procedural models for integrating perception with planning, and reflexive sensory computation. All of this is in the context of mobile manipulation with a Kinect-equipped KUKA youBot in the domain of the RoboCup@Work competition, with a focus on difficult constrained stacking problems (e.g. shelving).
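The reflexive, demand-driven sensing described above can be illustrated with a similarly hedged sketch: a hypothetical perception front-end that runs an (expensive) detector only when a reasoner actually queries a percept, caches the answer, and lets high-level knowledge such as a verbal statement pre-empt sensing altogether. The class, the `visible` predicate and the stand-in detector are all invented for illustration and do not describe the lab's software.

```python
from typing import Callable, Dict, Tuple

Key = Tuple[str, Tuple[str, ...]]


class DemandDrivenPerception:
    """Hypothetical perception front-end: a percept is computed only when a
    reasoner queries it, and the result is cached for later queries."""

    def __init__(self, detectors: Dict[str, Callable[..., bool]]) -> None:
        self._detectors = detectors
        self._cache: Dict[Key, bool] = {}
        self.detector_calls = 0  # how often an (expensive) detector actually ran

    def hint(self, predicate: str, *args: str, value: bool) -> None:
        """Record low-bandwidth high-level knowledge (e.g. from a verbal
        statement) so that the corresponding sensing can be skipped."""
        self._cache[(predicate, args)] = value

    def query(self, predicate: str, *args: str) -> bool:
        """Answer a planner's query, running the detector only on a cache miss."""
        key = (predicate, args)
        if key not in self._cache:
            self.detector_calls += 1
            self._cache[key] = self._detectors[predicate](*args)
        return self._cache[key]

    def invalidate(self) -> None:
        """Forget all cached percepts and hints, e.g. after the scene changes."""
        self._cache.clear()


# Stand-in for an expensive detector (e.g. object recognition on a point cloud).
perception = DemandDrivenPerception({"visible": lambda obj: obj == "box"})
perception.hint("visible", "can", value=False)  # e.g. "the can is not on this table"
print(perception.query("visible", "box"))  # True: detector runs
print(perception.query("visible", "box"))  # True: answered from the cache
print(perception.query("visible", "can"))  # False: answered from the hint
print(perception.detector_calls)           # 1
```

Only the percepts the planner asks about are ever computed, and a hint costs nothing at query time. A real system would additionally need a policy for when cached percepts go stale; the blunt `invalidate` method here is just a placeholder for that.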

The CogRobo Lab's web page is here:

http://cogrobo.sabanciuniv.edu/
